High Availability

USP is built for resilience and scale. It natively supports a high availability (HA) architecture based on an Active/Active model, so that access to internal systems stays secure and continuous even when an individual instance fails.

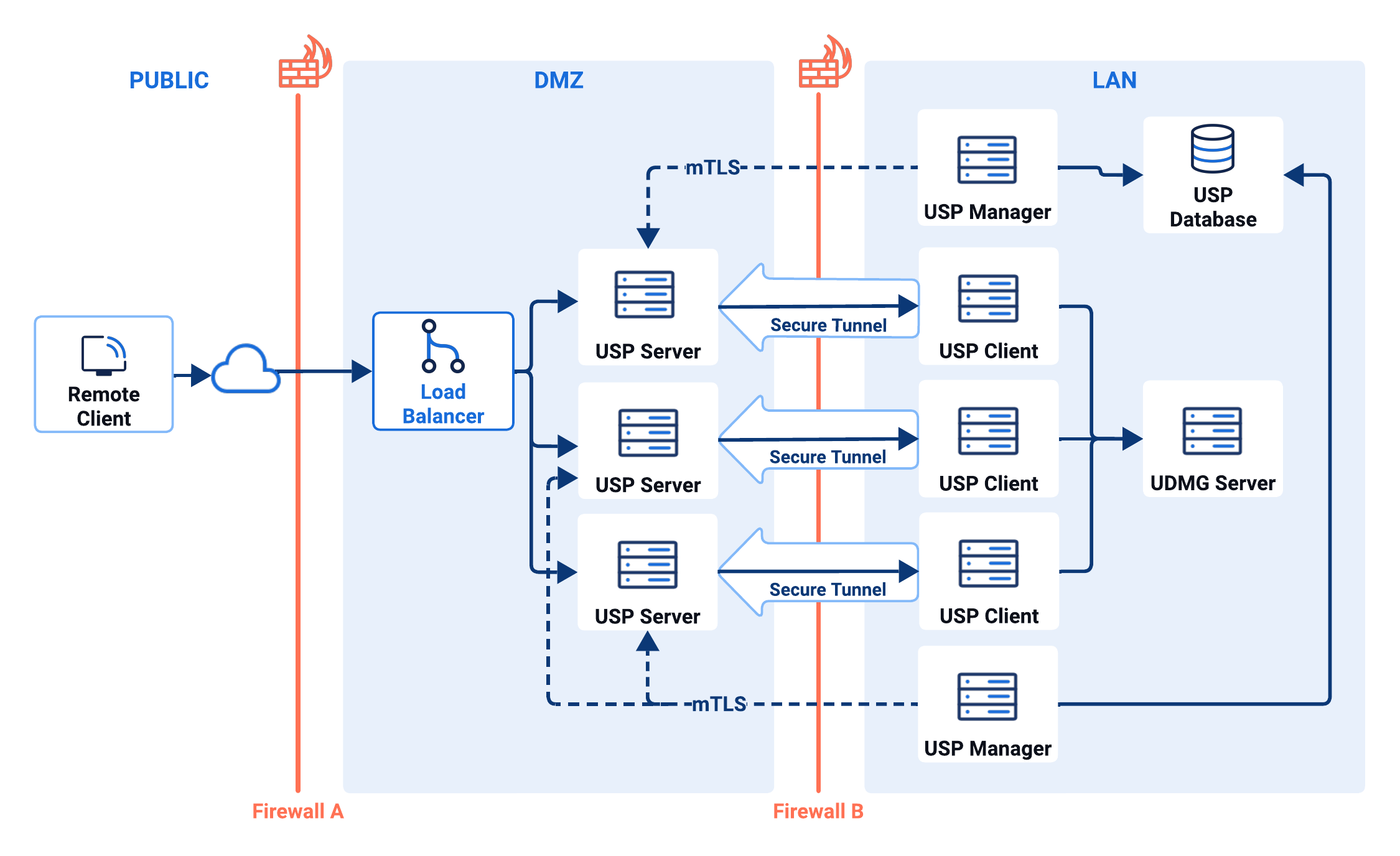

By deploying multiple USP Server, USP Manager, and USP Client instances, USP delivers fault tolerance, load distribution, and operational continuity. Incoming client connections can be distributed by an external load balancer (not provided by USP), which gives clients a single point of entry and routes them only to available instances.

Deploying a High Availability Architecture

To implement an HA deployment, you must install and configure multiple USP Server instances, multiple USP Manager instances, and a shared external database for the USP Managers. If your network requires it (e.g., due to firewall restrictions), you must also deploy USP Client instances in the internal network.

This section outlines the core requirements for each USP component.

While not strictly required, it is highly recommended that each USP Server, USP Manager, and USP Client instance use its own set of credentials:

- Certificates are used for mTLS authentication between USP Manager and USP Server.

- Keys are used for authentication between USP Client and USP Server.

USP Servers Installation

Each USP Server instance must be installed in the DMZ or equivalent network zone where it can accept connections from load balancers. The USP Server is responsible for handling inbound connections, performing session break, and establishing connections to internal targets.

To install each USP Server instance, follow the corresponding USP Installation Guide (once for each instance).

USP Database

In a high-availability deployment, all USP Manager instances must connect to a shared external Oracle database. This database stores all the USP Server configuration data, runtime state, and configuration versioning, ensuring consistency across all Manager nodes.

For guidance on installing the Oracle database, refer to the Database Installation Guide.

USP Managers Installation

To support high availability, you must deploy multiple USP Manager instances, each connected to the same external Oracle database. This allows administrative access to the USP Admin UI and USP REST API through multiple nodes, ensuring uninterrupted management even if a USP Manager instance becomes unavailable.

To configure database connectivity, set the following argument in each USP Manager configuration file:

database.engine = "oracle"

Additionally, ensure that the remaining arguments under the database block are configured identically across all Manager instances.
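The requirement above can be checked with a short script before the Managers are started. The following is a minimal sketch, not a USP tool: it assumes the settings of each Manager have already been loaded into a flat dictionary of dotted keys (the configuration file format is not covered here), and every name other than `database.engine`, including `database.host` and the Manager names, is hypothetical.

```python
from typing import Dict, Optional

Settings = Dict[str, str]


def database_mismatches(
    managers: Dict[str, Settings],
) -> Dict[str, Dict[str, Optional[str]]]:
    """Return the database.* keys whose values differ between Managers.

    Each mismatching key maps to {manager name: value, or None if unset}.
    """
    # Collect every database.* key seen on any Manager.
    keys = {
        key
        for settings in managers.values()
        for key in settings
        if key.startswith("database.")
    }
    mismatches: Dict[str, Dict[str, Optional[str]]] = {}
    for key in sorted(keys):
        values = {name: settings.get(key) for name, settings in managers.items()}
        # More than one distinct value (including "unset") is a mismatch.
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches


# Hypothetical example: the second Manager points at a different host.
example = {
    "manager-1": {"database.engine": "oracle", "database.host": "db-a.example"},
    "manager-2": {"database.engine": "oracle", "database.host": "db-b.example"},
}
```

Calling `database_mismatches(example)` reports only `database.host`, since `database.engine` is the same on both instances. An empty result means the database settings match.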

For step-by-step setup instructions, refer to the USP Installation Guides.

USP Clients Installation

Deploy USP Client instances in the internal network (LAN) when firewall policies prevent direct inbound connections from the DMZ. Each USP Client establishes a secure tunnel to a USP Server, enabling the server to reach protected systems behind the firewall.

Install one USP Client for each USP Server. Each Client must be configured to connect to a specific Tunnel exposed by the corresponding USP Server instance.

For installation and configuration steps, see the USP Installation Guides.

The Deployments

To support high availability, each USP Server instance must be assigned the same Listener (or set of Listeners). This allows all Servers to handle inbound connections using the same configuration parameters—such as Port, Route, and Default Outbound Node—ensuring consistent behavior regardless of which server receives the request.

To achieve this, you must create a separate Deployment of the Listener for each USP Server instance. Follow the Adding a Deployment steps, ensuring that you select the correct USP Server and, if required, the corresponding USP Client for each instance.
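The rule of one Deployment per Listener per USP Server can be expressed as a simple coverage check. The sketch below is illustrative only: it works on plain names rather than on any USP API, and the Listener and Server names are hypothetical.

```python
from typing import Iterable, List, Tuple


def missing_deployments(
    servers: Iterable[str],
    listeners: Iterable[str],
    deployments: Iterable[Tuple[str, str]],
) -> List[Tuple[str, str]]:
    """Return the (listener, server) pairs that have no Deployment yet.

    `deployments` holds the existing (listener, server) pairs.
    """
    deployed = set(deployments)
    server_list = list(servers)
    return [
        (listener, server)
        for listener in listeners
        for server in server_list
        if (listener, server) not in deployed
    ]


# Hypothetical example: the Listener is not yet deployed on the third Server.
gaps = missing_deployments(
    servers=["usp-server-1", "usp-server-2", "usp-server-3"],
    listeners=["sftp-listener"],
    deployments=[
        ("sftp-listener", "usp-server-1"),
        ("sftp-listener", "usp-server-2"),
    ],
)
```

Here `gaps` contains the single pair `("sftp-listener", "usp-server-3")`. An empty list means every USP Server instance can serve every Listener, which is the state an HA deployment requires.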

Configuration Example Using HAProxy

An external TCP load balancer must be configured to support high availability. It should distribute inbound client connections across all available USP Server instances and perform active health checks to detect when a server becomes unavailable. This ensures that traffic is only routed to healthy, responsive nodes, maintaining uninterrupted service.

Each USP Server instance must be hosted on a separate machine or virtual host to ensure fault isolation, resource independence, and consistent network binding. Each instance must have its own IP address and ports to support proper Listener and Tunnel configuration.

This example demonstrates how to load balance SFTP traffic across three USP Server instances:

- Three USP Server instances, each hosted on a separate host: 192.168.1.10, 192.168.1.11, 192.168.1.12.

- One Listener configured to accept SFTP connections on port 2222.

- Three Deployments of the same Listener, one per USP Server instance.

This example uses a single Listener for simplicity, but you can configure multiple Deployments across different USP Server instances to support multiple Listeners, depending on your port coverage requirements.

The following HAProxy configuration accepts SFTP traffic on port 2222 and distributes new connections across the three USP Server hosts using the least-connections strategy (balance leastconn). A stick table keyed on the client IP keeps later connections from the same client on the same server. The configuration also performs a TCP-level health check by validating that each server returns the expected SSH-2.0 banner.

    #----------------------------------------------------------------------
    # HAProxy config for SFTP Listener with 3 backend USP Server hosts
    #----------------------------------------------------------------------
    frontend sftp_frontend
        mode tcp
        # Set to the external SFTP port
        bind :2222
        default_backend sftp_backend

    backend sftp_backend
        mode tcp
        # Initial connections are balanced on least connection
        balance leastconn
        # Session persistence based on source IP
        stick-table type ip size 100k expire 4h
        stick on src
        # Health check: expect SSH-2.0 banner from USP Server
        option tcp-check
        tcp-check expect comment USP\ SFTP\ check string SSH-2.0
        # USP Server pool (one per host)
        server backend1 192.168.1.10:2222 check inter 10s fall 3 rise 2
        server backend2 192.168.1.11:2222 check inter 10s fall 3 rise 2
        server backend3 192.168.1.12:2222 check inter 10s fall 3 rise 2
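When troubleshooting, it can help to reproduce the banner check by hand from the load balancer host. The following is a minimal sketch of that check, not part of USP or HAProxy. The hosts and port come from the example above; the function names are illustrative, and reading a single chunk of the response is a simplification.

```python
import socket
from typing import Dict, Iterable

# Hosts and port taken from the example above.
USP_SERVERS = ["192.168.1.10", "192.168.1.11", "192.168.1.12"]
SFTP_PORT = 2222


def contains_ssh_banner(data: bytes) -> bool:
    """Return True if the received bytes contain the SSH-2.0 marker."""
    return b"SSH-2.0" in data


def probe(host: str, port: int, timeout: float = 3.0) -> bool:
    """Connect to host:port and look for an SSH-2.0 banner."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.settimeout(timeout)
            # Simplification: inspect only the first chunk the server sends.
            return contains_ssh_banner(conn.recv(256))
    except OSError:
        # Refused, unreachable, or timed out: treat the server as unavailable.
        return False


def report(hosts: Iterable[str], port: int) -> Dict[str, bool]:
    """Probe each host and return {host: True if the banner was seen}."""
    return {host: probe(host, port) for host in hosts}
```

Running `report(USP_SERVERS, SFTP_PORT)` from a machine that can reach the USP Server hosts returns one boolean per host. A host that reports `False` here would be expected to fail the HAProxy health check as well.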

Monitoring an HA Architecture

After deploying your high-availability architecture, you can monitor the health and operational status of all USP Server instances from any USP Manager instance.

In the USP Admin UI, navigate to Monitoring > Status to:

- Confirm that all USP Server instances are running and reachable.

- Check the real-time status of Tunnels and Listeners.

- Manually start or stop components as needed for maintenance or troubleshooting.

For details on available options, status values, and operational controls, refer to Status.